With so much information out there on image quality, accuracy, frames per second, focal points, distortion, AI algorithms, and more, it’s tough to know which inspection drone is right for your needs. This blog should clear some of the fog and help you see the big picture.

It’s pretty obvious why we love drones for visual inspection. They can maneuver, hover, and hold position, making inspections safer, more efficient, and more thorough. New advances in computer vision have even made it possible to inspect large-scale sites autonomously, which matters most when you need precise monitoring of critical assets and infrastructure.

Here’s the thing. A drone is only as good as the data it collects. Without high-quality imaging, you can’t perform accurate inspections or make reliable decisions. With it, you can catch minute faults early on, which helps you prevent downtime for your business, improve safety, and even cut the risk of environmental damage.

With so many options, buzzwords, and parameters for image quality out there, we wrote a tech deep dive to demystify the decision-making for autonomous visual inspection solutions. It goes through some essential background information, introduces the ins and outs of accuracy and quality for visual inspection, and then zeroes in on image quality and why it’s important. This blog takes you through some of the deep dive’s highlights, but you’re welcome to read it all to learn more.

Clearing up image quality myths

Before we get started, keep two things in mind. 1) There is no single answer or parameter that sets the benchmark for image quality. 2) Good quality is not just a matter of camera features; it ultimately depends on what you need the image for. Although a camera’s resolution is expressed in megapixels, the resolution of an imaging system has to include a spatial metric. And the more detail, the better. Let’s take a closer look and clear up some common misconceptions.

Myth: Lower GSD means better inspection

Truth: GSD tells you precisely how much ground each pixel covers, but a very crisp image and a very blurry one can share the same GSD.

Ground sampling distance (GSD), the distance between the centers of two adjacent pixels as measured on the ground, is the most commonly cited statistic in discussions of resolution. A 1 cm GSD means that every pixel represents one centimeter on the ground. As a result, the same camera flying at a low altitude will have a smaller (i.e., better) GSD than it would at a high altitude.

Although GSD precisely quantifies the level of detail that can be detected, you can still have the same GSD for a very crisp image and a very blurry one. This is because GSD doesn’t take into account diffraction, defocus, aberrations, distortion, lens curvature, motion blur, and other artifacts.
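To make the altitude relationship concrete, here is a minimal sketch of the usual similar-triangles GSD calculation. The function name and the camera numbers are hypothetical, chosen only for illustration, and are not the specs of any drone mentioned in this blog.

```python
def ground_sampling_distance_cm(sensor_width_mm, focal_length_mm, altitude_m, image_width_px):
    """Return the ground distance covered by one pixel, in centimeters."""
    # Similar triangles: ground footprint / altitude = sensor width / focal length.
    footprint_width_m = altitude_m * sensor_width_mm / focal_length_mm
    # Spread the footprint evenly over the pixels across the image.
    return footprint_width_m * 100.0 / image_width_px


# Hypothetical camera: 13.2 mm wide sensor, 8.8 mm lens, 5472 pixels across.
low = ground_sampling_distance_cm(13.2, 8.8, altitude_m=20, image_width_px=5472)
high = ground_sampling_distance_cm(13.2, 8.8, altitude_m=60, image_width_px=5472)
print(f"GSD at 20 m: {low:.2f} cm/px, at 60 m: {high:.2f} cm/px")
```

Notice that nothing in the formula knows about focus, motion, or lens quality, which is exactly why two images with the same GSD can look so different.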

Myth: More megapixels means better quality images

Truth: Too many megapixels can harm a camera’s performance. The sensor size is more important when it comes to getting sharper details.

Photo resolution reflects the tradeoff between field of view (FOV) and GSD. A higher resolution means that we can either widen the FOV without compromising GSD, or get a finer (smaller) GSD without compromising FOV, or even improve both.

In short, photo resolution by itself doesn’t reflect the amount of detail or the quality of the image. That said, if you’ve got the right optical system, you can get a high level of detail and a large field of view. In the commercial drone industry, standard photo resolution ranges from about 12MP (the retired Percepto Sparrow, the Skydio 2+ for Enterprise, and the DJI Mini 2) through 24MP for the Percepto Air Max, up to about 48MP for the DJI Mavic 2 Enterprise Advanced.
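The FOV versus GSD tradeoff comes down to simple arithmetic: the ground width of a photo is the number of pixels across multiplied by the GSD. The sketch below uses hypothetical pixel counts to show the two ways extra pixels can be spent.

```python
def footprint_width_m(image_width_px, gsd_cm):
    """Ground width covered by one photo: pixels across times ground size of each pixel."""
    return image_width_px * gsd_cm / 100.0


# Two hypothetical sensors, both flown so that each pixel covers 1 cm.
narrow = footprint_width_m(image_width_px=4000, gsd_cm=1.0)  # 40 m wide
wide = footprint_width_m(image_width_px=6000, gsd_cm=1.0)    # 60 m wide

# Or spend the extra pixels on detail: keep the 40 m footprint and get a finer GSD.
finer_gsd_cm = narrow * 100.0 / 6000
print(narrow, wide, round(finer_gsd_cm, 2))
```

Either way, the pixel count only sets what is possible on paper. Whether those pixels carry real detail still depends on the optics.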

Myth: The more lines per centimeter, the better the system

Truth: Higher spatial resolution means a smaller area is photographed, and you’ll need more flight time to cover the same area.

Spatial resolution, measured in line pairs per centimeter (lp/cm), describes the amount of distinguishable detail captured on a physical surface. This is usually the first and most common measure of image quality.

But more lines per centimeter also means each photo covers a smaller area, which translates directly into more flight time or more footage to cover the same site. To balance this tradeoff, we calibrated our solution to get the best possible detail for inspection at close range, so that any cracks or anomalies are visible, while ensuring that the same configuration works well for mapping applications, which don’t require as much detail.
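A rough way to see the cost of extra detail is to count how many photos a simple grid needs to cover a site. This is a back-of-the-envelope sketch with hypothetical site and sensor numbers, not a flight planner; the `overlap` parameter is an assumption added for illustration.

```python
import math


def photos_needed(site_w_m, site_h_m, image_w_px, image_h_px, gsd_cm, overlap=0.0):
    """Count the photos in a simple grid needed to cover a rectangular site."""
    # Footprint of one photo on the ground, in meters.
    foot_w = image_w_px * gsd_cm / 100.0
    foot_h = image_h_px * gsd_cm / 100.0
    # Overlap between neighboring photos shrinks the new ground each one adds.
    step_w = foot_w * (1.0 - overlap)
    step_h = foot_h * (1.0 - overlap)
    return math.ceil(site_w_m / step_w) * math.ceil(site_h_m / step_h)


# Hypothetical 200 m x 200 m site, 4000 x 3000 pixel photos.
coarse = photos_needed(200, 200, 4000, 3000, gsd_cm=1.0)
fine = photos_needed(200, 200, 4000, 3000, gsd_cm=0.5)
print(coarse, fine)
```

Halving the GSD doubles the detail in each direction, and roughly quadruples the number of photos for the same site.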

Myth: MTF is a precise measurement of contrast detail

Truth: There is no standard way to measure MTF. Each camera sensor has its own MTF chart as does each lens. The lens MTF should match or exceed that of the sensor.

Modulation transfer function (MTF) measures how well a lens preserves contrast as details get finer, and is a good indicator of image sharpness. An MTF chart plots the contrast and resolution of a lens from its center to its edges, against a theoretical perfect lens. Of course, this perfect lens doesn’t exist because glass is not 100% transparent and the curved surface of the lens inevitably distorts the light passing through.

What’s important is that each camera sensor has its own MTF chart, too. If the MTF of the lens is lower than the MTF of the sensor, the lens will be the limiting factor. Ideally, you want the MTF of the lens to be on par with, or even higher than, the MTF of the sensor, so you get the full benefit of the camera’s capabilities.
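Here is a small sketch of why the weaker component sets the limit. It assumes the common simplification that, at a given spatial frequency, the MTF of a lens and sensor in series is the product of the two. The contrast formula is the standard Michelson definition, and all numbers are hypothetical.

```python
def michelson_contrast(i_max, i_min):
    """Contrast of a line pattern from its brightest and darkest intensities."""
    return (i_max - i_min) / (i_max + i_min)


def mtf_at_frequency(image_contrast, object_contrast):
    """MTF at one spatial frequency: how much of the original contrast survives."""
    return image_contrast / object_contrast


def system_mtf(lens_mtf, sensor_mtf):
    """Assumed cascade model: component MTFs multiply, so the result never beats either part."""
    return lens_mtf * sensor_mtf


# Hypothetical values at a single spatial frequency.
sensor = 0.60
soft_lens_system = system_mtf(0.30, sensor)   # lens is the limiting factor
sharp_lens_system = system_mtf(0.90, sensor)  # sensor is now the limiting factor
print(round(soft_lens_system, 2), round(sharp_lens_system, 2))
```

With the soft lens, most of the sensor’s capability is wasted. With the sharp lens, the system lands close to what the sensor can deliver on its own.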

The ingredients for superior image quality

We’ve already looked at four important and well-known parameters for image quality, but there are other lesser-known factors that, together, affect the viability of inspection solutions.

Superior detail separation

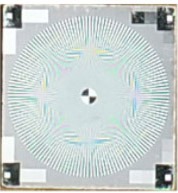

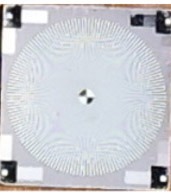

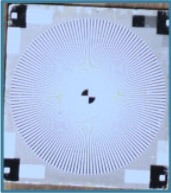

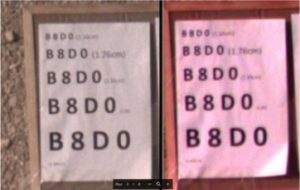

Detail separation isn’t about producing the best-looking image. It’s about producing the image in which the smallest faults can be detected at an early stage, and from a distance. A more robust algorithm with high detail separation will help you catch issues earlier. Figure 2 shows the results of a test we ran to measure detail separation for four different drone sensors. The Air Max clearly separates the black and white lines at the edge of the pad. In contrast, detail separation for the same lines is almost non-existent for the Mavic-E.

Panels: DJI Mavic-E, DJI Phantom 4, DJI M300, Percepto Air Max

Figure 2: Differences in detail separation at 20m distance

White balance

White balance is the process that adjusts the color ‘temperature’ of your data captures so they match what our eyes would see. Having a sophisticated software solution for white balance will make problem detection easier and lower the rate of false alarms.

Figure 3: White balance helps ensure colors appear as seen by the human eye
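To give a feel for what a white balance step does, here is a sketch of the textbook gray-world method, which assumes the average color of a scene should be neutral gray. This is a deliberately simple stand-in, not the algorithm used by any drone mentioned here, and the pixel values are made up.

```python
def gray_world_white_balance(pixels):
    """Scale each channel so the average color of the image becomes neutral gray.

    `pixels` is a list of (r, g, b) tuples with values from 0 to 255.
    Assumes every channel has a non-zero average.
    """
    n = len(pixels)
    means = [sum(p[c] for p in pixels) / n for c in range(3)]
    gray = sum(means) / 3.0
    # A channel that is too strong on average gets a gain below 1, and vice versa.
    gains = [gray / m for m in means]
    return [
        tuple(min(255, round(p[c] * gains[c])) for c in range(3))
        for p in pixels
    ]


# A hypothetical capture with a warm (reddish) cast.
warm = [(200, 150, 100), (100, 75, 50)]
balanced = gray_world_white_balance(warm)
print(balanced)
```

Production systems use far more sophisticated methods, but the idea is the same: remove the color cast so that a real change on the asset stands out from a change in lighting.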

Best lens for the job

Each lens has specific distances at which it performs best, based on its MTF, distortion, focal distance, and some other parameters. While a lens can be calibrated for a certain depth of field, it can’t be set for multiple distances. Images taken beyond the range established for the lens will have lower image quality. You’ll want a system that has the best lens and lens calibration for your use case.
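The range a lens is set up for can be estimated with the standard thin-lens depth of field formulas. The sketch below is an idealized model; the circle of confusion value (`coc_mm`) and the lens numbers are assumptions for illustration only.

```python
import math


def depth_of_field_mm(focal_mm, f_number, focus_mm, coc_mm=0.03):
    """Near and far limits of acceptable sharpness for an idealized thin lens."""
    # Hyperfocal distance: focusing here keeps everything out to infinity acceptably sharp.
    hyperfocal = focal_mm ** 2 / (f_number * coc_mm) + focal_mm
    near = focus_mm * (hyperfocal - focal_mm) / (hyperfocal + focus_mm - 2 * focal_mm)
    if focus_mm >= hyperfocal:
        return near, math.inf
    far = focus_mm * (hyperfocal - focal_mm) / (hyperfocal - focus_mm)
    return near, far


# Hypothetical 50 mm lens at f/8, focused at 5 m.
near, far = depth_of_field_mm(focal_mm=50, f_number=8, focus_mm=5000)
print(f"Sharp from {near / 1000:.1f} m to {far / 1000:.1f} m")
```

Anything closer than the near limit or beyond the far limit will be softer, no matter how many megapixels the sensor has.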

Optimized software parameters

Although we don’t often think about it, each drone solution has to take the raw pixels collected and transform them to compose an image. This process, known as demosaicing or debayering, should ideally run at the edge in real time on the drone’s GPU. Having a powerful feedback loop between the camera and software makes it possible for the drone to continuously adjust its position and direction to get the best image, while the system automatically chooses the exposure and gain that will produce an image with the least noise, the least blur, and the maximum amount of recognizable detail.
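To show what turning raw pixels into an image involves, here is the simplest possible sketch: collapsing each 2x2 cell of a Bayer mosaic into one RGB pixel. It assumes an RGGB layout and halves the resolution. Real pipelines interpolate to full resolution on the GPU, so treat this as an illustration only, with made-up sensor values.

```python
def demosaic_rggb_half(raw):
    """Collapse each 2x2 RGGB cell of a Bayer mosaic into one RGB pixel.

    `raw` is a list of rows of sensor values, laid out as:
        R G R G ...
        G B G B ...
    """
    rgb = []
    for y in range(0, len(raw) - 1, 2):
        row = []
        for x in range(0, len(raw[y]) - 1, 2):
            r = raw[y][x]
            # Each cell has two green samples; average them.
            g = (raw[y][x + 1] + raw[y + 1][x]) / 2
            b = raw[y + 1][x + 1]
            row.append((r, g, b))
        rgb.append(row)
    return rgb


# Hypothetical 2x4 raw capture, which yields a 1x2 RGB image.
raw = [
    [10, 20, 12, 22],
    [24, 30, 26, 32],
]
image = demosaic_rggb_half(raw)
print(image)
```

The choices made at this stage affect noise and fine detail, which is part of why software parameters belong in any discussion of image quality.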

Getting the right drone for your business

Although there is no one-size-fits-all solution when it comes to drone inspection, you can still get the best-in-class image quality available in today’s marketplace. By giving top priority to lens configuration, image quality, and software algorithms, Percepto drones deliver best-in-class detail separation and, as a result, detailed, high-quality change detection. Read the complete tech deep dive to learn more about what image quality is right for your use cases, become familiar with the different terms for image quality, and make an educated decision about the best fit for your operations.